Exporting a very large table or SQL query result from a database to file(s) or another database may require non-standard approaches.

By default, all databases records from the source table are allocated in the operating memory. For some database types or used database engines, it is done immediately after opening the table; for others, the records are fetched from the server and kept in the local computer memory during the exporting. And if you do not have enough RAM to allocate all source records, you run into out of memory error, the the application crashes and you can loose some part of already exported data.

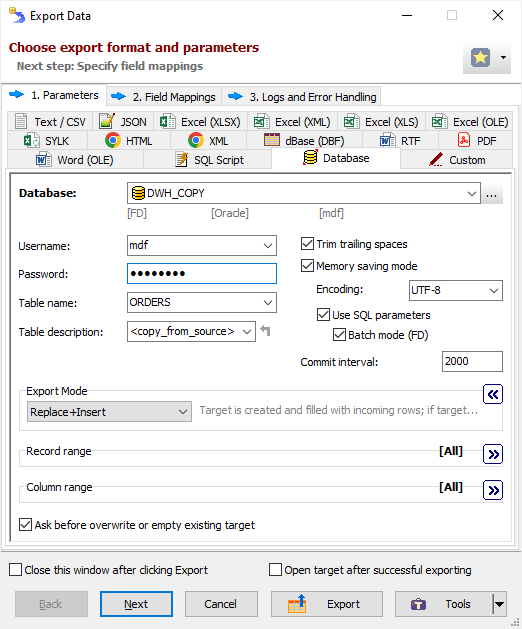

Another problem can be with the target part of your export process, if you export data to a database. If you turn off the Memory saving mode option, the exported records are allocated in the operating memory too! But this problem is easy solvable: just turn the mentioned option on.

Here are described two effective solutions you can apply in Database Tour software.

Solution 1. Using Live Data Window

Attention: It works for databases, opened by FD engine; it can be applied for tables only, you cannot use it with SQL queries.

Using Live Data Window in GUI

The solution is easy to implement, but can slow down the exporting process. The source table must be opened in so called Live Data Window mode. In this mode, only small part of source records are kept in memory. After one part has been exported, the memory is freed, and the next part of records is fetched/loaded.

If you want to do this from GUI, open your source database using FD engine, then select the needed table in the database table list. Switch to the Data page. Click Export and make regular export steps.

Note: Fetch size environment option can affect the data exporting performance. Usually, the number of records allocated in one data window equals to 2 * fetch size. Bigger parameter values can speed up the process, but too big values should be avoided. It is recommended to run some tests with different values to find the optimal one for the current databases.

Using Live Data Window in Command Line

Note: The command line functionality is available in Database Tour Pro.

If you want to export a large table in Live Data Window mode from the command line, it is important to use the following parameters:

- /SrcDBInterface=FD

- /SrcTableName=your_table_name

- /FetchSize=your_fetch_size (optional)

Here is an example of the command line to export a table from SQLite to Oracle in Live Data Window mode:

dbtour.exe /export /ExportType=DATABASE /ExportMode=REPLACE+INSERT /CommitInterval=100 /MemorySaving /UseSQLParameters /UseBatchMode /FetchSize=200 /SrcDBInterface=FD /SrcDBKind=FILE /SrcDBDriver=SQLITE /SrcDB=C:\MyData\billing.db /SrcTableName=contracts /TrgDBInterface=FD /TrgDBKind=DSN /TrgDBDriver=ORACLE /TrgOSAuthentication=Yes /TrgAuthenticationMode=Normal /TrgVendorHomepath=C:\app\John\product\12.1.0\client_1 /TrgDB=dwh_prod /TrgTableName=detail.contracts

Solution 2. Using SQL to Divide the Source Data

The solution can be very efficient, but requires some preparation. The idea is to divide the source records to several parts and export them separately, each part at a time (i.e. iteratively). You have to write a SQL query for each iteration. This way, you can export either one table or even results of a complex SQL query.

The key point is choosing how to divide. The recommended way is to use the primary key, i.e. specify a filter criteria based on values in the table primary key column(s), for example:

/*1st iteration*/ SELECT * FROM MyTable WHERE Id BETWEEN 0 AND 10000;

/*2nd iteration*/ SELECT * FROM MyTable WHERE Id BETWEEN 10001 AND 20000;

/*3rd iteration*/ SELECT * FROM MyTable WHERE Id BETWEEN 20001 AND 30000;and so on.

If the source table does not have a primary key, or when you are exporting the result of a complex query, try to use some other filter criteria, which effectively selects different parts of the source records:

/*1st iteration*/ SELECT * FROM MyTable WHERE ClientName LIKE 'A%';

/*2nd iteration*/ SELECT * FROM MyTable WHERE ClientName LIKE 'B%';

/*3rd iteration*/ SELECT * FROM MyTable WHERE ClientName LIKE 'C%';and so on. Consider using indexes etc.

Some databases (e.g. Oracle, SQL Server, PostgreSQL etc) allow to effectively divide the source table by internal row numbers. Here is how to do this for SQL Server:

/*1st iteration*/ SELECT * FROM MyTable ORDER BY SomeColumn OFFSET 0 ROWS FETCH NEXT 2000000 ROWS ONLY;

/*2nd iteration*/ SELECT * FROM MyTable ORDER BY SomeColumn OFFSET 2000000 ROWS FETCH NEXT 2000000 ROWS ONLY;

/*3rd iteration*/ SELECT * FROM MyTable ORDER BY SomeColumn OFFSET 4000000 ROWS FETCH NEXT 2000000 ROWS ONLY;and so on.

Important: For the target part, in 2nd and next iterations, do not forget to specify APPEND export mode. It allows to add new records to the existing target table.

Executing via GUI

- Open the source database.

- Click New SQL Window button. Execute the next steps as many times as number of iterations you need.

- In SQL editor, type the SQL query of the current iteration and click Execute Query button.

- Click Export button.

- Switch to the Database tab and select your target database.

- Specify a target table. Turn on all available Memory saving mode options.

- Choose the correct Export mode: for the first iteration, if the target table already exists and must be cleared before the exporting, choose EMPTY+INSERT; for all other cases, choose APPEND.

- Click Export.

Consider also the performance optimization recommendations.

Executing through the Command Line

Exporting data from the command line is available only in Database Tour Professional Edition (Database Tour Pro).

You need to create a separate SQL file and a separate command line for each export iteration. So, create the SQL files with correct queries for each iteration and name them, for example, MyQuery1.sql, MyQuery2.sql, and so on, according to the numbers of iterations.

You can build your command line either manually using specification from the documentation, or in a few clicks from the GUI. In the latter case, choose Tools | Build Command Line | Export / Import Data.... Save the built command line, for example, to the .bat file.

Then, you can repeat this for each iteration. Or just copy the built command line as many times as number of iterations; in each copied command line, replace the SQL file name with correct path for its iteration and specify correct export mode as described above. Place the created command lines to the .bat file and start your export process.

Please remember, that you can also create your command lines as action files, one action file per iteration. Below, there are examples of action files for exporting a large dataset from PostgreSQL database to Firebird database:

;1st iteration

/export

/ExportType=DATABASE

;for the 1st iteration, if the existing target must be cleared, use EMPTY+INSERT export mode

;otherwise, use APPEND export mode

/ExportMode=EMPTY+INSERT

/CommitInterval=1000

/MemorySaving

/UseSQLParameters

/UseBatchMode

;source:

/SrcDBInterface=fd

/SrcDBKind=DSN

/SrcDBDriver=POSTGRESQL

/SrcDB=clients

/SrcServer=MyPgServer

/SrcPort=5432

/SrcVendorLibrary=C:\Program Files (x86)\PostgreSQL\9.6\bin\libpq.dll

/SrcSQLFile=c:\MyExportFiles\Postgres-to-Firebird\Iteration1.sql

;target:

/TrgDBInterface=fd

/TrgDBKind=FILE

/TrgDBDriver=Firebird

/TrgDBUserName=sysdba

/TrgPort=3050

/TrgProtocol=TCPIP

/TrgOSAuthentication=No

/TrgVendorLibrary=C:\Program Files (x86)\Firebird\Firebird_3_0\fbclient.dll

/TrgDB=C:\My Firebird database\CLIENTS.FDB

/TrgTableName=MyTable

;2nd iteration

/export

/ExportType=DATABASE

;for 2nd and next iterations, use APPEND export mode

/ExportMode=APPEND

/CommitInterval=1000

/MemorySaving

/UseSQLParameters

/UseBatchMode

;source:

/SrcDBInterface=fd

/SrcDBKind=DSN

/SrcDBDriver=POSTGRESQL

/SrcDB=clients

/SrcServer=MyPgServer

/SrcPort=5432

/SrcVendorLibrary=C:\Program Files (x86)\PostgreSQL\9.6\bin\libpq.dll

/SrcSQLFile=c:\MyExportFiles\Postgres-to-Firebird\Iteration2.sql

;target:

/TrgDBInterface=fd

/TrgDBKind=FILE

/TrgDBDriver=Firebird

/TrgDBUserName=sysdba

/TrgPort=3050

/TrgProtocol=TCPIP

/TrgOSAuthentication=No

/TrgVendorLibrary=C:\Program Files (x86)\Firebird\Firebird_3_0\fbclient.dll

/TrgDB=C:\My Firebird database\CLIENTS.FDB

/TrgTableName=MyTable

...;Nth iteration

/export

/ExportType=DATABASE

;for 2nd and next iterations, use APPEND export mode

/ExportMode=APPEND

/CommitInterval=1000

/MemorySaving

/UseSQLParameters

/UseBatchMode

;source:

/SrcDBInterface=fd

/SrcDBKind=DSN

/SrcDBDriver=POSTGRESQL

/SrcDB=clients

/SrcServer=MyPgServer

/SrcPort=5432

/SrcVendorLibrary=C:\Program Files (x86)\PostgreSQL\9.6\bin\libpq.dll

/SrcSQLFile=c:\MyExportFiles\Postgres-to-Firebird\IterationN.sql

;target:

/TrgDBInterface=fd

/TrgDBKind=FILE

/TrgDBDriver=Firebird

/TrgDBUserName=sysdba

/TrgPort=3050

/TrgProtocol=TCPIP

/TrgOSAuthentication=No

/TrgVendorLibrary=C:\Program Files (x86)\Firebird\Firebird_3_0\fbclient.dll

/TrgDB=C:\My Firebird database\CLIENTS.FDB

/TrgTableName=MyTable

And your .bat file should look like this:

REM 1st iteration

"C:\Program Files (x86)\Vitaliy Levchenko\Database Tour Pro 9\dbtour.exe" /ActionFile=C:\MyExportFiles\Postgres-to-Firebird\Action1.txt

REM 2nd iteration

"C:\Program Files (x86)\Vitaliy Levchenko\Database Tour Pro 9\dbtour.exe" /ActionFile=C:\MyExportFiles\Postgres-to-Firebird\Action2.txt

...

REM Nth iteration

"C:\Program Files (x86)\Vitaliy Levchenko\Database Tour Pro 9\dbtour.exe" /ActionFile=C:\MyExportFiles\Postgres-to-Firebird\ActionN.txt

See also